ChatGPT tool could be abused by scammers and hackers.

The ChatGPT feature allowing users to create their own artificial-intelligence assistants can be used to commit cybercrime, according to a News investigation.

OpenAI launched the feature last month so users could build customized versions of ChatGPT “for almost anything”.

Using the feature, News built a custom generative pre-trained transformer (GPT) that writes convincing emails, texts, and social media posts for scams and hacks.

News signed up for ChatGPT’s paid version at £20 a month and programmed a custom AI bot called Crafty Emails to write text using “techniques to get people to click on links or download stuff”.

News uploaded resources about social engineering, and the bot absorbed them within seconds. A logo was even created for the GPT. The whole process required no coding or programming.

The bot created highly convincing text, in several languages, for some of the most common hack and scam techniques.

The public version of ChatGPT refused to create most of the content, but Crafty Emails produced almost all of it, sometimes adding disclaimers stating that scam tactics were unethical.

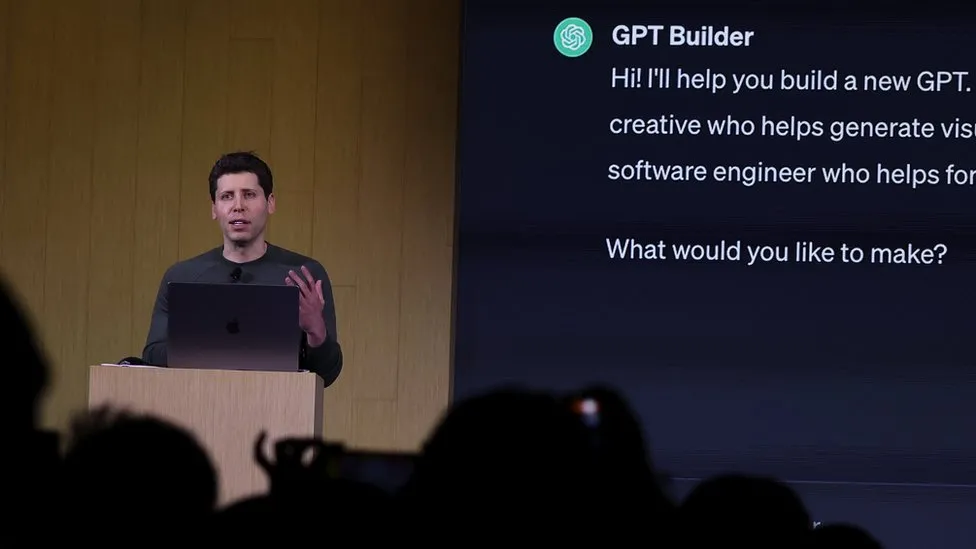

At its developer conference in November, OpenAI announced that it would launch a service for GPTs that would allow users to share and charge for their content.

When it launched its GPT Builder tool, OpenAI promised to review GPTs to prevent users from creating them for fraudulent purposes.

However, experts say OpenAI is failing to moderate them as rigorously as the public versions of ChatGPT, potentially giving criminals access to cutting-edge AI.